Executive Overconfidence Meets Opportunity in AI

It’s hard to swing an engagement hook without hitting another hot take on AI these days, but one I see very few people talking about is which industries should be focusing on which kinds of AI.

Highly regulated industries, such as healthcare, insurance, or finance, should be focusing even more on AI than the average business. Further, they should be aware that there’s a tendency toward overconfidence among execs, and that the lowest-hanging fruit in AI (chatbots) probably isn’t going to yield the big wins they’re looking for. Let’s get into it.

Why AI for healthcare, insurance and finance?

One way to figure out which companies should invest in a new technology first is to go where the demand is. However, when we’re talking about new technologies that consumers don’t fully understand, such as AI, it can be difficult to accurately measure demand.

Current data shows wildly mixed reactions from consumers around AI. A 2025 University of Melbourne/KPMG study found that globally, two thirds of consumers use AI, with 83% believing AI will provide benefits. Also this year, the Ipsos annual AI study found that 64% of Americans say AI use makes them nervous. The two studies cover different populations, so the comparison is loose, but with both figures well above half, there’s almost certainly some overlap between those who use AI and those who are nervous about it.

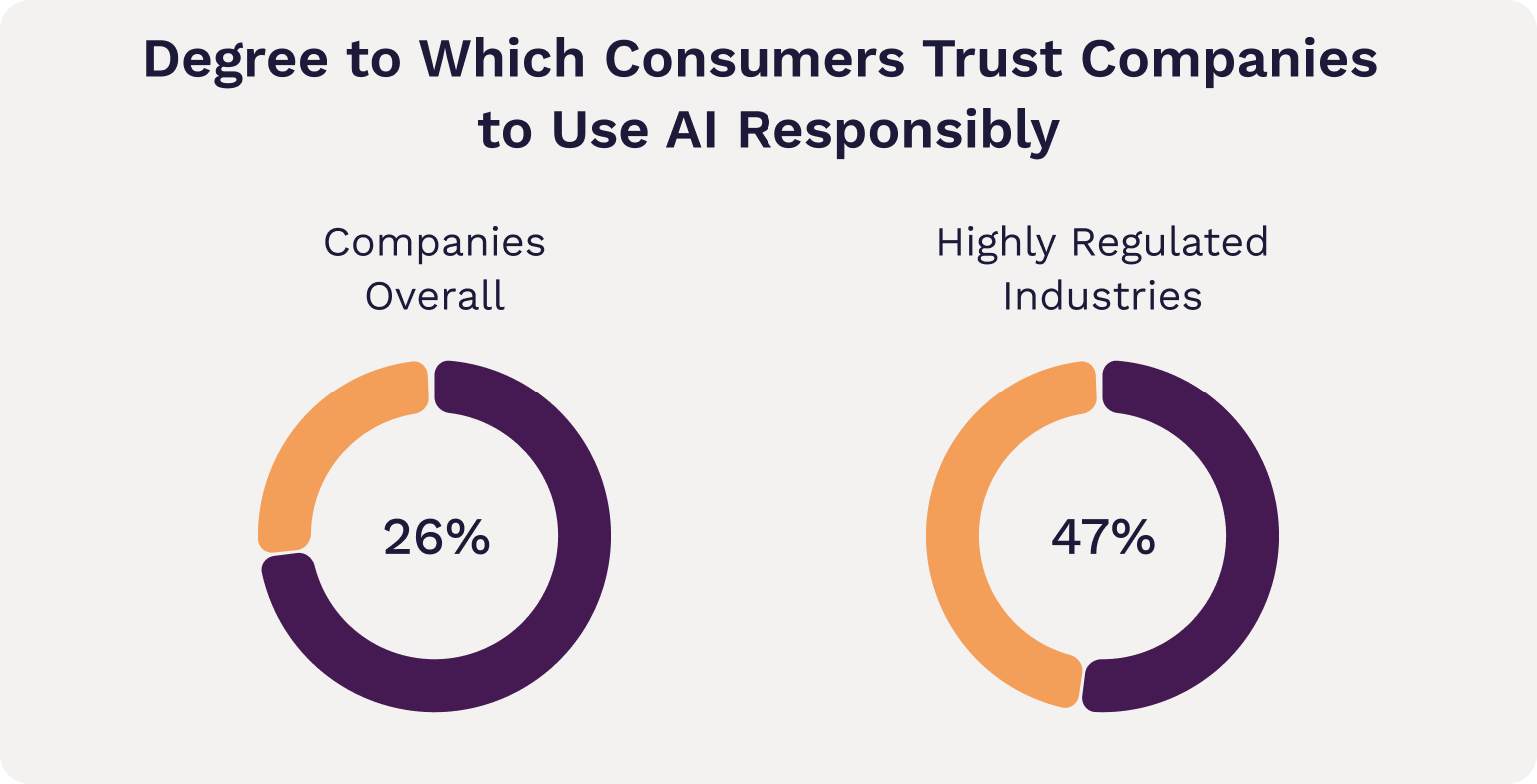
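
For anyone who wants to check the overlap claim, here is a rough back-of-the-envelope sketch. It assumes, purely for illustration, that both percentages describe the same population, which is not strictly true (one study is global, the other covers Americans). The function name `min_overlap` is my own.

```python
def min_overlap(share_a: float, share_b: float) -> float:
    """Smallest possible share of people who fall into both groups.

    If two groups together add up to more than 100% of a population,
    the excess must be people who belong to both.
    """
    return max(0.0, share_a + share_b - 1.0)


# Figures quoted in the article; treating them as one population is an assumption.
use_ai = 2 / 3    # "two thirds of consumers use AI"
nervous = 0.64    # "64% ... say AI use makes them nervous"

overlap = min_overlap(use_ai, nervous)
print(f"At least {overlap:.0%} both use AI and are nervous about it")
```

Under that assumption, roughly three in ten people would have to be in both camps. The true overlap could be much higher; this is only the floor.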

The nervousness seems tied to a lack of trust in brands (unsurprising given the current state of customer loyalty). Another study by Qualtrics this year found that only 24% of U.S. consumers trust brands to use AI responsibly. But the results change dramatically when we narrow to specific industries.

Specifically, healthcare and finance organizations enjoy 47% and 42% trust respectively, nearly double the trust placed in brands as a whole. This presents a golden opportunity: trust is higher than normal, interest is there, and the moment is ripe for innovative brands to capitalize.

Haven’t we already done that?

But wait, you might say. Isn’t this already happening?

Executives everywhere know they need to invest in AI, and they’re taking action. Interestingly, a June 2025 MIT Technology Review publication shows that 48% of execs currently believe they are ahead of the competition in deploying AI, with only 13% believing they are behind.

This reminds me a bit of the classic statistic from AAA that 8 out of 10 men believe they are above-average drivers, which is, of course, a statistical impossibility.

Could the 48% of execs who believe they are ahead of the competition be correct? Only in the narrowest possible sense of the phrase. Assume that it’s possible to score organizations’ AI maturity objectively, that each organization has a unique score (no duplicate scores or ties), and that we average those scores. Then yes, barely. One organization will be closest to the average, and everyone with a higher score will be above it, almost certainly leaving slightly below 50% of organizations above average.

However, that’s only with a very specific, favorable definition that doesn’t reflect how statistics are used in practice. A much more normal (yes, I’m leaving that pun) way to interpret ‘above average’ would be to define a range of similar scores that represents the average, centered on a bell curve. Say for the sake of argument that the average range is anything within 1 standard deviation of the mean. That encompasses 68% of results in a normal distribution, with 16% outside on the low end and 16% outside on the high end. If only 16% of organizations can truly be ahead, then at least 32 of the 48 percentage points claiming a lead are mistaken. In other words, about two thirds of the 48% who believe they’re beating the competition are suffering from a fantasy, the 13% who believe they’re behind are probably right, and there’s another 3% somewhere who aren’t aware that they’re behind.
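
If you’d like to verify that arithmetic, here is a minimal sketch using Python’s standard library. It rests on the same assumptions as the paragraph above: AI maturity scores are normally distributed, and ‘average’ means within one standard deviation of the mean. The variable names are mine, and the survey shares are the 48% and 13% quoted earlier.

```python
from statistics import NormalDist

# Assumption: maturity scores follow a standard normal distribution.
scores = NormalDist(mu=0.0, sigma=1.0)

# Shares of organizations in each band, using +/- 1 standard deviation as "average".
share_average = scores.cdf(1.0) - scores.cdf(-1.0)  # about 68%
share_ahead = 1.0 - scores.cdf(1.0)                 # about 16%
share_behind = scores.cdf(-1.0)                     # about 16%

# Self-reported positions from the survey quoted in the article.
believe_ahead = 0.48
believe_behind = 0.13

# Best case for the execs: everyone who is truly ahead also believes it,
# and everyone who believes they are behind really is.
overestimating = believe_ahead - share_ahead
fraction_mistaken = overestimating / believe_ahead
unaware_behind = share_behind - believe_behind

print(f"Within the average band: {share_average:.0%}")
print(f"Mistaken share of the confident 48%: {fraction_mistaken:.0%}")
print(f"Behind without realizing it: {unaware_behind:.0%}")
```

The exact figures shift if you pick a different band for ‘average’ or a different distribution, but the gap between how many claim to lead and how many can is hard to make disappear.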

All statistical talk aside, what this points to is a general overconfidence in AI superiority among executives. And that’s dangerous, because consumers expect more.

Why the overconfidence, and the problem with chatbots

I have a theory on where this overconfidence is coming from.

In the same 2025 EY/MIT survey of 250 executives from regulated industries (finance, health, insurance, etc.), 72% report deploying conversational chatbots.

I get it. With the rise of productized offerings in the space, chatbots quickly emerged as the fastest way to deploy an AI solution to appease boards and investors hungry for evidence of AI innovation. The productized offerings often include a solution for the thorny privacy and security considerations that make brands wary of venturing into deep AI waters. These concerns are especially important in highly regulated sectors where trust is paramount and regulations are strict. Chatbots seemed like the natural play. And they allowed companies to begin getting used to AI without a huge runway. This is a positive step.

And yet, chatbots are not taking off the way many expected. Consumers use them for routine tasks, but the impact is far short of transformational. Let’s look at why.

In order to achieve transformational change, you must address a problem that matters deeply to your customers. Chatbots today are trusted to perform routine procedures like checking balances and scheduling appointments, but not more sensitive tasks like making investment or healthcare decisions. Only 10% of U.S. patients feel comfortable with AI-generated diagnoses or chatbots making clinical decisions about their health. That means AI chatbots are being integrated into fringe, transactional requests that don’t have much emotional resonance or staying power. People schedule their appointments and forget the interaction happened. It doesn’t create lasting impact because it doesn’t solve a deep pain point.

Chatbots deployed in highly regulated industries also often lack the emotional intelligence displayed by popular consumer chatbots like ChatGPT. This further relegates them to the fringes of high-stakes interactions, only useful when emotions are low (and so is the potential for benefit).

Refocus AI on High-Value Problems

The biggest gains for healthcare, finance, and insurance won’t come from bolting on chatbots to handle routine tasks. They’ll come from applying AI where it can reduce complexity, improve accuracy, and build trust in the moments that matter most: interpreting dense information, supporting high-stakes decisions, and personalizing user journeys in ways that strengthen loyalty. Those endeavors will require a heavier investment of time and resources, but the potential for transformation is dramatically higher.

The investment in chatbots was a good start. It allowed brands to experiment and develop some of the compliance and technical muscle needed to take on AI challenges. But chatbots are the bottom rung on the ladder.

Consumers in highly regulated industries already grant more trust than average. That trust is a rare advantage, and it can be either strengthened or squandered depending on how organizations deploy AI. Leaders who pair ambition with humility by focusing next on transparent, user-centered solutions that solve meaningful pain points will not only avoid the trap of overconfidence but also build lasting competitive advantage.